Pipelines as Code: Getting the Most Out of Gitlab

Choosing a build server is a weighty decision for any software endeavor, since at some point nearly every member of your team is likely to come into contact with it. Finding one that causes the least amount of friction and headaches for everyone is imperative. This article will detail how Gavant, after many years of iteration, came to settle on our current build structure using Gitlab and how we currently use it to best suit our development style.

Why Did We Choose Gitlab

The simplest answer to this question is: we were already using it. Our git repositories had been living in Gitlab since the start of the great migration away from SVN. However, back in those days Gitlab’s build functionality was brand new and not available under the basic installation license. For years, we had a separate standalone build server (TeamCity) instead. But we were outgrowing our old build server. And by happy coincidence that lined up nicely with a Gitlab upgrade, giving us access to the CI/CD pipeline features. So we figured, why not give it a try?

Excellent – we had a perfect excuse to fiddle around with this new feature and put it to use. But why did we stick with it and ultimately migrate all our new projects away from our old build server?

Scalability

Gitlab is free and open source, with no limits on the number of projects or size of your data if you host it entirely on your own servers. The same applies to its CI/CD features. This was a huge win considering we were fast outgrowing our other classic licensed build servers, which charged per-project, per-user, or per-build-agent even if we hosted everything ourselves. The choice here was pretty simple.

Version Control of Pipelines

You only have to accidentally delete a complex build configuration once to know the pain and dread of not having version control to roll back your changes. There are of course workarounds, like abstracting as much of the build processes as possible into script files that can be committed alongside the project code. But with some of the classic build servers, many settings come baked into the web console UI with no clean way to export them, let alone easily tie them to version control. Gitlab instead uses a .gitlab-ci.yml settings file which lives right in the project repo. Changes are easy to track since they’re committed just like any other code file. Copying jobs or entire pipelines from project to project also becomes much simpler and less error-prone as a result. While this feature is by no means unique to Gitlab, it’s definitely a significant plus worth noting.

Combined Repo and Build Server

The final – and arguably most significant – positive to using Gitlab CI/CD is not having to install and maintain a second application dedicated to builds, including all the server and network infrastructure to go with it. With Gitlab, it’s all in one place. There’s no need to spend hours of dev time trying to get two separate locations to communicate properly, or worry about updating one and subsequently breaking compatibility with the other. From a build-engineering standpoint, this direct connection simplifies access to git events and properties as they apply to your build scripts (which we will discuss further below), and enables easier cross-project dependencies or triggers if so desired.

There was also an unexpected organizational benefit that came with combining the two applications. There is less back-and-forth in reconciling build server events with other incoming code changes and merge requests.

The consolidation of project, group, and permission settings between both areas no doubt helped this process as well, preventing hiccups often caused by small overlooked inconsistencies.

Some Caveats and Considerations

Gitlab turned out to be the main choice for us moving forward. But like anything, it has its hangups, and there are situations where it is far from an ideal solution.

First, it’s not perfect for all project frameworks. Gitlab’s build-step configurations are little more than open access to a shell terminal on a designated server. This provides great design freedom, from basic organization of build flows to allowing specific dependencies on each build agent. This freedom does, however, come at a cost. Unlike classic dedicated build-server software, Gitlab does not come with built-in features and shortcuts for common framework-specific operations (think NuGet restores and MSBuild for .NET). For older or more dependency-heavy frameworks, the time saved in application setup and maintenance is outweighed completely by the time it would take to configure a new build agent with all the packages, dependencies, and environment settings needed to simply run a build.

Second, it doesn’t integrate with other repositories (for free). Gitlab is, first and foremost, a git server. It is not designed to manage connections to various source control locations like a stand-alone build server would be. If your architecture has many projects that live in separate flavors of repositories, or is even simply spread out among several instances of git, Gitlab CI/CD is probably not a good fit. It is not meant to be a hub. Most of its advantages lie in the direct connection it has with the project repos.

How Do We Use Gitlab

We weighed our pros and cons, fiddled around with the features, and ultimately decided to set up some project pipelines. But Gitlab literally gives you a blank slate regarding how to design and implement your project flows. This can be incredibly intimidating at first. And without a good amount of forethought, it can easily lead to a convoluted mess that’s arduous to maintain. So how do we use and organize Gitlab’s features to make everyone’s lives a little easier and avoid spaghetti-code’s angsty younger brother spaghetti-build?

Separation of Concerns

This concept is important to pretty much every avenue of programming. But it holds just as much weight with builds and deploys. The idea is to keep various functions as self-contained as possible. You want to avoid piling everything into one long inter-dependent list that can’t easily be read or re-used. This practice is imperative with Gitlab in particular, since it doesn’t provide any sort of default build “steps” or “sections”. To illustrate this, let’s look at a bare-bones job configuration in the .yml.

    ci-build:
      stage: build
      script:
        - nvm use 10.17.0
        - BuildStamp=$(bash my-script.sh GetStamp)
        - mkdir $BuildStamp
        - ember build --output-path=$BuildStamp/dist

The “script:” section above is simply open access to the command line in the root folder of your project. You have complete freedom regarding what commands you put here. For instance, the “GetStamp” function exists in a separate shell script, so we can easily reuse it in many different jobs. Thankfully, it’s pretty straightforward to call shell scripts and functions from here. Just note that the syntax is not well documented (as of the time of this writing) and the paths always need to be relative to the current working directory.
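The article does not show the contents of “my-script.sh”, so the following is only a sketch of one way such a helper could be laid out so that “bash my-script.sh GetStamp” works. The stamp format and the “local” fallback are assumptions made for illustration; only the script name, the function name, and the $CI_PIPELINE_ID variable come from the examples in this article.

```shell
#!/usr/bin/env bash
# my-script.sh: hypothetical sketch of a shared helper script.
# Called from a job's script section as: bash my-script.sh GetStamp

# Print a build stamp made of the pipeline ID and a UTC timestamp.
# CI_PIPELINE_ID is set by Gitlab; "local" is an assumed fallback for
# runs outside of a pipeline.
GetStamp() {
  printf 'build-%s-%s\n' "${CI_PIPELINE_ID:-local}" "$(date -u +%Y%m%d%H%M%S)"
}

# Dispatch: treat the first argument as the function to call and pass any
# remaining arguments through. Unknown names fail loudly so the job fails.
if [ "$#" -gt 0 ]; then
  case "$1" in
    GetStamp) "$@" ;;
    *) echo "my-script.sh: unknown function '$1'" >&2; exit 1 ;;
  esac
fi
```

Listing the allowed function names in the case statement is a deliberate choice in this sketch: a typo in the .yml stops the job with a clear message instead of running an arbitrary command.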

Naming Conventions

This may seem like a no-brainer, or an unnecessary piece of housekeeping, but with Gitlab .ymls in particular, maintaining cohesive naming standards will save you a lot of headaches.

The first point of concern comes with variable names. Environment/configuration variables that are used in the .yml scripts are stored outside the project repo, in the web console settings area. This makes it very easy to overlook a small typo or case difference that would cause a broken build. Further, in our setup at the time of writing, Gitlab did not support differing values for the same stored variable across environments (newer releases offer environment-scoped variables, so check what your version supports). So you may easily end up with multiple “copies” of the same variable, one for each environment. Adopting a naming structure early and sticking with it dramatically reduces the chances for typos and confusion down the line.
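One way to catch a misnamed variable early is to check for it at the top of a job, before any real work starts. The sketch below assumes a hypothetical convention of an upper-case environment prefix followed by the variable name; “DEV_API_URL” and “DEV_DEPLOY_TOKEN” are invented names, not variables from our projects.

```shell
# Fail if any variable named in the arguments is unset or empty.
# Gitlab exposes CI/CD variables to jobs as environment variables,
# so printenv can look them up by name (names are case-sensitive).
require_vars() {
  missing=0
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "Missing CI variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Build the expected names from an environment prefix.
# Example: check_env_vars DEV checks DEV_API_URL and DEV_DEPLOY_TOKEN.
check_env_vars() {
  prefix=$1
  require_vars "${prefix}_API_URL" "${prefix}_DEPLOY_TOKEN"
}
```

Calling something like “check_env_vars DEV” as the first script line of a deploy job turns a silent typo into an immediate, readable failure.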

The second point is less concerning, and more a way to utilize one of Gitlab’s main strengths – direct access to branch metadata. We can use Gitlab’s “only” setting to determine what kinds of processes need to be run on a given commit based on the branch name. For example, we can restrict production deployments to come from “master”. Or, we can have feature branches picked up for a full build and test sequence.

    dev:
      stage: lower
      script:
        - ...
      only:
        - /^feature\/[0-9]+$/
        - master

You can set these configurations at the individual job level, using most valid regex (see full details here) for very fine-grained control. You can also use them in combination with Merge Request requirements, allowing only reviewed code on a particular branch. It is, of course, not foolproof, but is an excellent step towards preventing accidental deployments of experimental or incomplete features to an inappropriate environment.
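Before committing a branch pattern, it can help to try it against a few branch names locally. The sketch below does that for the feature-branch pattern from the example above. It uses grep's extended regex, while Gitlab evaluates patterns with its own engine, so treat it as an approximation that should hold for simple patterns like this one. The sample branch names are made up.

```shell
# Return success when a branch name matches the feature-branch pattern.
# The slash needs no escaping here because grep has no /.../ delimiters.
is_feature_branch() {
  printf '%s\n' "$1" | grep -Eq '^feature/[0-9]+$'
}

for branch in feature/1234 feature/login-page hotfix/1234 master; do
  if is_feature_branch "$branch"; then
    echo "$branch: matches"
  else
    echo "$branch: no match"
  fi
done
```

Only “feature/1234” matches the regex. In the job above, “master” is picked up by its own literal entry in the “only” list rather than by the pattern.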

Artifact Dependencies

On any project, it is important to ensure that the copy of code which was tested and verified is as identical as possible to what gets deployed to production. With dynamic package-dependent projects, this requires even further scrutiny. In these cases, package-restoration is part of the build process. Each time a build is run, a fresh set of packages is downloaded and compiled into the project.

This seems all well and good during development, as all the required packages are guaranteed to exist and be up-to-date before the program runs. However, if one or more of those packages is updated remotely between final testing and the production build, the production build picks up the newer version and parity is lost. In this scenario, untested code can unknowingly be dumped straight to production, where it can wreak havoc that is difficult to debug.

To prevent this situation, we build our projects once – immediately upon commit to the git repo. Then, the resulting artifacts pass from job to job with all external packages included. This approach is more taxing on our build servers both in terms of space and processing. But it’s a worthy tradeoff to reduce the risk of these third-party bugs being introduced into our live environments. In the .yml, this is achieved by defining stages which by default force a dependency chain from one to the other.

    stages:
      - build
      - lower

    ci-build:
      stage: build
      script:
        - ember build --output-path=deployment/dist
      artifacts:
        name: "artifact-$CI_PIPELINE_ID"
        paths:
          - deployment/dist

    dev:
      stage: lower
      script:
        - bash my-script.sh Deploy artifact-$CI_PIPELINE_ID

In this example, the “lower” stage is dependent upon the “build” stage purely via their order in the stages list, so the artifacts from the “ci-build” job will be automatically passed to the “dev” job. Further, the “dev” job will not be available until the “ci-build” succeeds. Anything not designated as an artifact will be wiped from the build directory once a new job begins, so be sure to pack up everything that’s needed.
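If you want extra assurance that what gets deployed is byte-for-byte what was built, one option is to ship a checksum manifest inside the artifact and verify it before deploying. This is not part of our pipeline as described here, just a sketch of the idea. It assumes “sha256sum” is installed on the build agent, and the manifest name “dist.sha256” is invented.

```shell
# Run at the end of the build job: record a checksum for every file in the
# artifact directory. The manifest is written into the same directory so it
# travels with the artifact.
write_manifest() {
  dir=$1
  (cd "$dir" && find . -type f ! -name dist.sha256 -exec sha256sum {} + > dist.sha256)
}

# Run at the start of a later job: fail if any listed file is missing or
# has changed since the manifest was written.
verify_manifest() {
  dir=$1
  (cd "$dir" && sha256sum -c dist.sha256 > /dev/null)
}
```

With the example above, that would mean calling “write_manifest deployment/dist” after the ember build and “verify_manifest deployment/dist” before the Deploy step. Note that this detects changed or missing files, not extra ones.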

Note the variable “$CI_PIPELINE_ID”. The “CI_” prefix marks a predefined Gitlab variable; this one contains the ID of the current pipeline. There are a whole slew of useful metadata options like this available to the .yml configuration (see full list here).

Autogenerated Lower Environments

If your infrastructure allows for it, creating temporary environments for individual branches is a great way to ease the pain of developers accidentally stepping on each other’s toes when working on separate features.

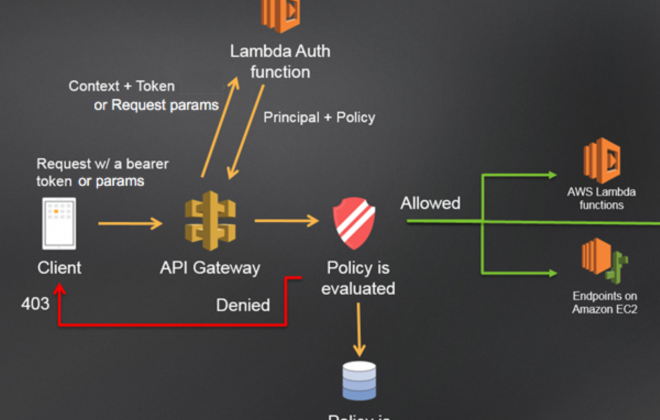

To help facilitate this, Gitlab’s Environments feature allows you to publish available environments to the Operations section of the web console site. The names and URLs for each environment can be dynamic, based upon regular CI or global variables. These settings can also be published after a deploy job completes, which is handy in cases where auto-generated/random values from the deploy determine the resulting URL (we ran into this exact situation using AWS API Gateway).

To publish an environment, simply add the following section to your job configuration. This example uses the branch name as base for the name and URL:

    environment:
      name: temp-envt-$CI_COMMIT_BRANCH
      url: https://my-dev-$CI_COMMIT_BRANCH.com

To instead use a dynamic value created during the deploy process, you’ll need to write the value to a special artifact file referred to as “dotenv”:

    dev:
      stage: lower
      script:
        - MyUrl=$(bash my-envt-create.sh)
        - echo "AutoCreatedUrl=https://$MyUrl" >> post-deploy.env
      environment:
        name: temp-envt-$CI_COMMIT_BRANCH
        url: $AutoCreatedUrl
      artifacts:
        reports:
          dotenv: post-deploy.env

This example grabs the URL output from “my-envt-create.sh” and writes it to a text file called “post-deploy.env”, the designated dotenv artifact. Gitlab will automatically pick up on this file once the job is complete and publish the URL to the operations page on the console site.

And voila! That’s it. No more digging through web servers or logs to find the autogenerated dev environments and write them down somewhere. This feature is very new and there are still a few caveats to using it (see full details here).
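One detail to watch with the branch-based names and URLs in this section: a branch called “feature/1234” contains a slash, which is not valid inside a hostname. Gitlab provides a predefined “$CI_COMMIT_REF_SLUG” variable for this purpose. The sketch below shows a comparable transformation in plain shell for scripts that need it outside of the .yml. The function name is invented, and its exact rules (collapsing runs of characters) are an assumption, not a copy of Gitlab's behavior.

```shell
# Turn a branch name into a string that is safe inside a hostname:
# lower-case it, replace every run of characters other than a-z and 0-9
# with a single "-", cap the length at 63 characters, then trim any
# leading or trailing "-".
slugify() {
  printf '%s\n' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9]\{1,\}/-/g' \
    | cut -c1-63 \
    | sed -e 's/^-//' -e 's/-$//'
}

slugify "feature/1234"        # prints feature-1234
slugify "Feature/Login_Page"  # prints feature-login-page
```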

Auto Teardown

Finally, my favorite discovery in Gitlab CI so far is the ability to hook into the git “branch-deleted” event. I’ve yet to see this in other standalone build servers, since they usually only listen for “push” events. Currently, this feature is tightly coupled with the “environment” feature discussed above and it does not work as a standalone. But it’s still very useful for running cleanups once a branch and/or feature is complete.

Any job that is assigned to an environment can be configured to listen for a branch-deleted event. This is known as on_stop in the Gitlab world. Simply assign a second cleanup job as the “on_stop” action, which will then be called when the corresponding branch is deleted. See the illustration below:

    deploy:
      stage: lower
      when: manual
      environment:
        name: lower-$CI_COMMIT_BRANCH
        url: https://my-dev-$CI_COMMIT_BRANCH.com
        on_stop: teardown
      script:
        - echo "do your deploys here"

    teardown:
      stage: lower
      when: manual
      environment:
        name: lower-$CI_COMMIT_BRANCH
        action: stop
      variables:
        GIT_STRATEGY: none
      script:
        - echo "do your cleanup here"

It is a little counterintuitive at first, since the teardown is called from the previous deployment job rather than vice-versa. But it does make sense, since you don’t want to run a teardown every time a random branch is deleted if it never had a deployed environment in the first place.

Some important notes:

- The environment names across both jobs must match exactly, or the teardown won’t run.

- The GIT_STRATEGY for the teardown must be set to none, or else the job will try to pull files from a branch that no longer exists and will break.

- Clicking the “Stop” button on an environment will trigger the “on_stop” event, the same as if you had deleted the branch, so be wary of that.
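Because the teardown can be triggered more than once (by the “Stop” button and again by a branch deletion), it helps if the cleanup is safe to repeat. The sketch below assumes a made-up layout where each temporary environment is a directory under “ENV_ROOT”; substitute whatever your deploy actually creates. Since GIT_STRATEGY is set to none, scripts committed to the repo may be missing or stale in the teardown job, so logic like this would need to be inlined in the .yml or installed on the build agent.

```shell
# Hypothetical root directory holding one folder per temporary environment.
ENV_ROOT="${ENV_ROOT:-/tmp/temp-envts}"

teardown_env() {
  name=$1
  # Refuse an empty name so we never remove ENV_ROOT itself.
  if [ -z "$name" ]; then
    echo "teardown_env: environment name is required" >&2
    return 1
  fi
  target="$ENV_ROOT/$name"
  if [ -d "$target" ]; then
    rm -rf -- "$target"
    echo "Removed $target"
  else
    # Already gone, for example after an earlier Stop click: succeed
    # so a repeated teardown does not fail the job.
    echo "Nothing to remove for $name"
  fi
}
```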

Finding this feature has been a huge time saver for me personally. I no longer have to track down old temp environments and delete them manually to save space on our servers. It also makes it much easier to debug server/config issues, since there’s no more wading through the remnants of stale environments to find the current one.

In Conclusion

Choosing and implementing a solid build process is a complex matter, since every situation is going to have unique needs. But I hope this overview has shown that it need not be quite as intimidating as it first seems. There are many avenues to consider, but most common problems have plenty of tools and workaround options to help smooth out the bumps. An open framework like Gitlab does demand attention to design and scripting, but you can start small and have something to experiment with fairly quickly.

More important than choosing a particular suite of software is fleshing out your own processes that work for your team. Then it’s a matter of matching that up as best you can with the tools that are available to you. Happy Building!
